Adaptive Audio for AirPods isn't Amazing (yet)

Too many modes, too much choice.

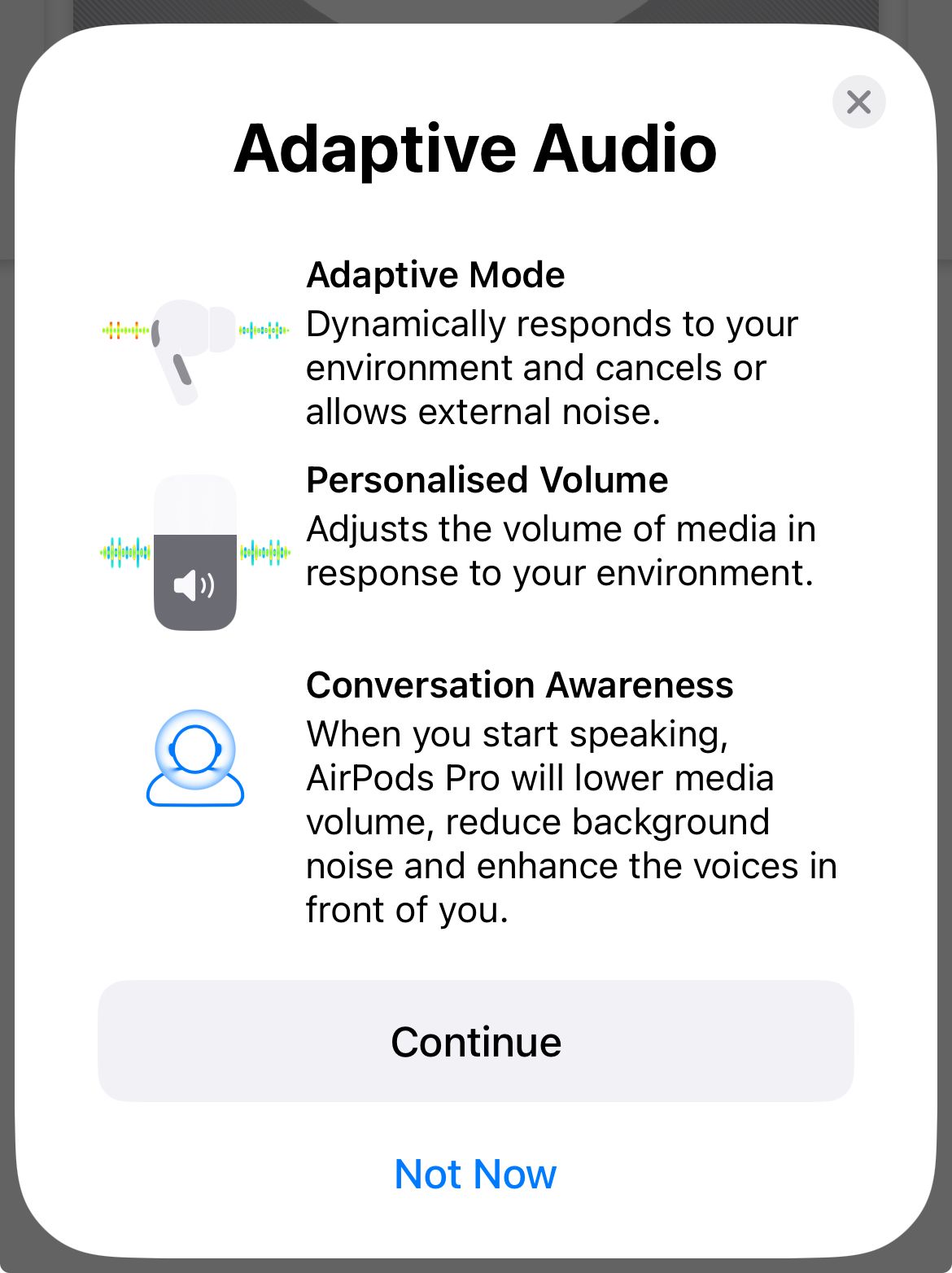

Upgrading to iOS 17 brings a bunch of new features, including a new mode for AirPods Pro 2: Adaptive Audio. Apple describes the feature as one that:

“Dynamically responds to your environment and cancels or allows external noise.”

In my testing over the past week, it's not quite there. Or perhaps, it's unclear to me which external noises are considered worthy of being cancelled or allowed, to use Apple's terms.

Now I'll admit I'm not an easy test case.

I've been trying this out during regular days cruising on the British waterways. This means occasional sounds of rushing water, banging and clanking noises from operating locks, or people calling out from other boats or the towpath.

What I've mostly found is that I notice every time Adaptive Audio shifts between noise cancellation and transparency. It could be a gust of wind rustling the trees, or me rummaging around in a box of items to find a windlass. I'll feel that eerie shift of pressure in my ear and suddenly extra sounds are coming in.

I use Transparency for most day-to-day tasks, reserving noise cancelling for loud planes, trains, or noisy cafes. Even if Adaptive Audio does its job properly, I can't figure out which scenario I want it to improve.

Conversation Awareness

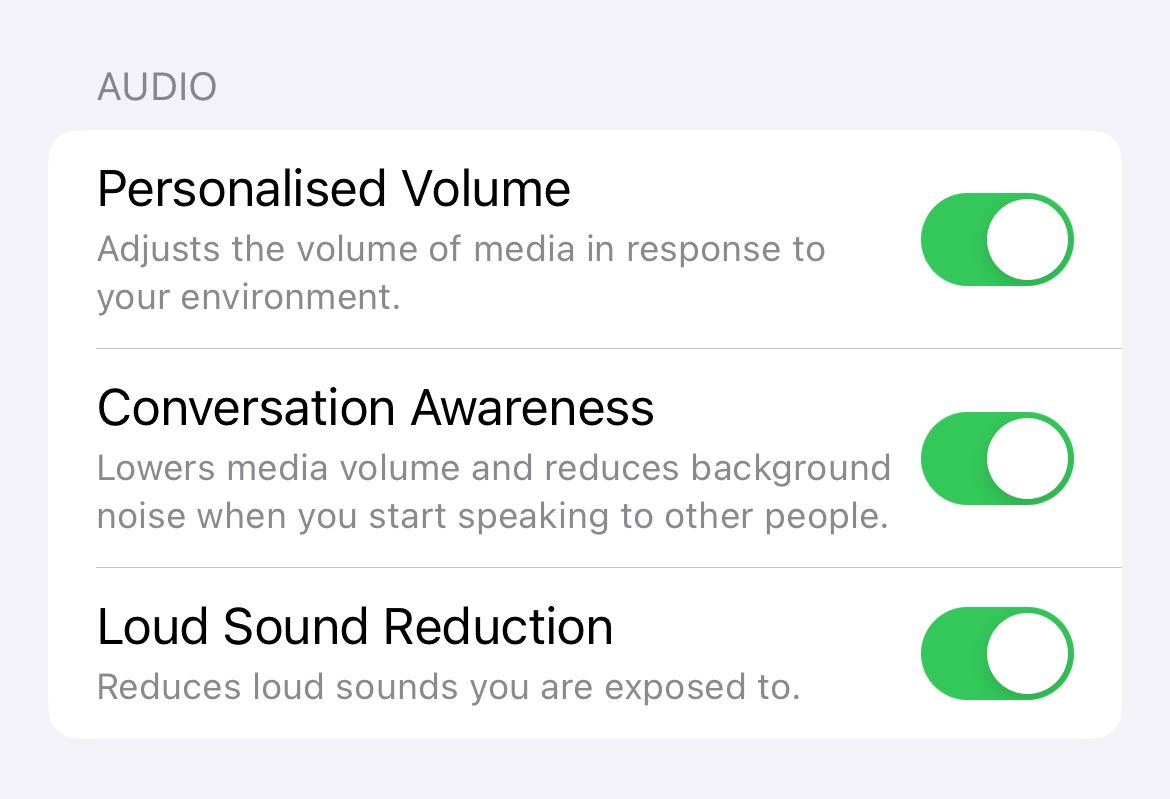

Secondly, and slightly confusing matters, "Conversation Awareness" is also new, but you can use it in any mode.

Conversation Awareness has more value in my eyes (uh, ears): ducking the volume of music or a podcast when you're talking. It seems to be less about controlling the level of noise cancellation, and more about the volume of your media.

But the obvious challenge is this: it only kicks in when I talk, because the feature works by picking up my own voice. If someone unexpectedly says something to me – exactly when it would be handy – nothing changes.

I'm not expecting magic here, as the real answer is near impossible: figure out when someone is speaking to me and lower the volume before I respond (usually with "sorry, what was that?").

Update Sep 20, 2023: since posting about this and the relatively different take from John Gruber, the most consistent feedback I have seen is this – “don't use conversation awareness if you have a pet”.

Too Much Too Soon

AirPods are an integral part of so many people's lives. No one was clamoring for these new features, and given that, I believe Apple should have been more cautious with this release and rolled out new functionality at different times.

Conversation Awareness is the easiest to explain, and makes the most sense to an average person. Once enabled, it works in all modes, and requires no input. This should have come first.

Loud Sound Reduction already existed, and mostly does its job without users intervening.

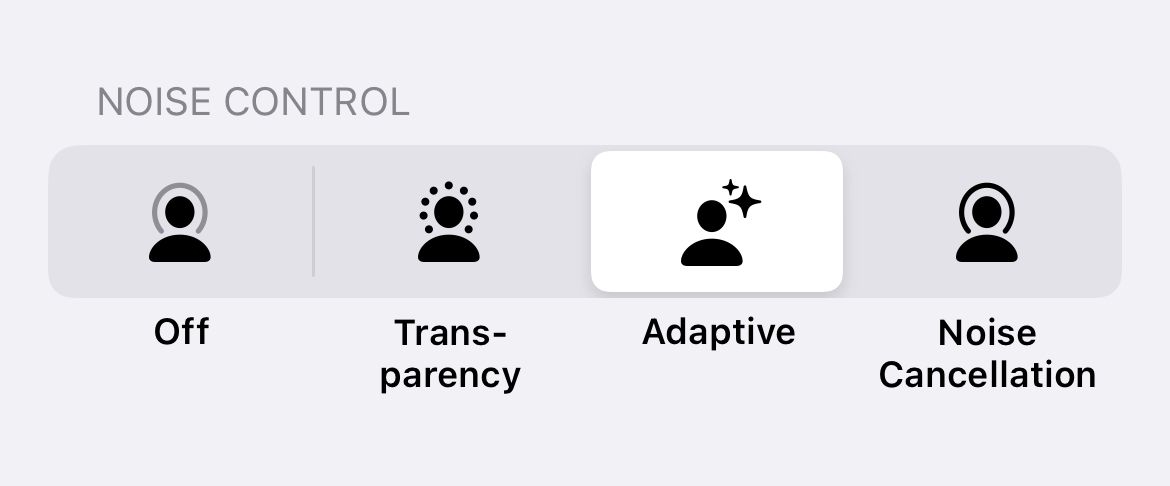

Adaptive Audio adds a burden for the user: now users have to decide which of four modes to switch between when long-pressing the stem (Off, Transparency, Adaptive, Noise Cancellation).

It's long been my belief that "Off" shouldn't even be a mode for AirPods Pro. Transparency is effectively the same thing as Off for most users, and unless or until Adaptive Audio gets more intelligent, I'm not sure non-nerds are going to understand what the mode does.

But it's out now, and I simply won't be using it unless or until it provides a clearer benefit.

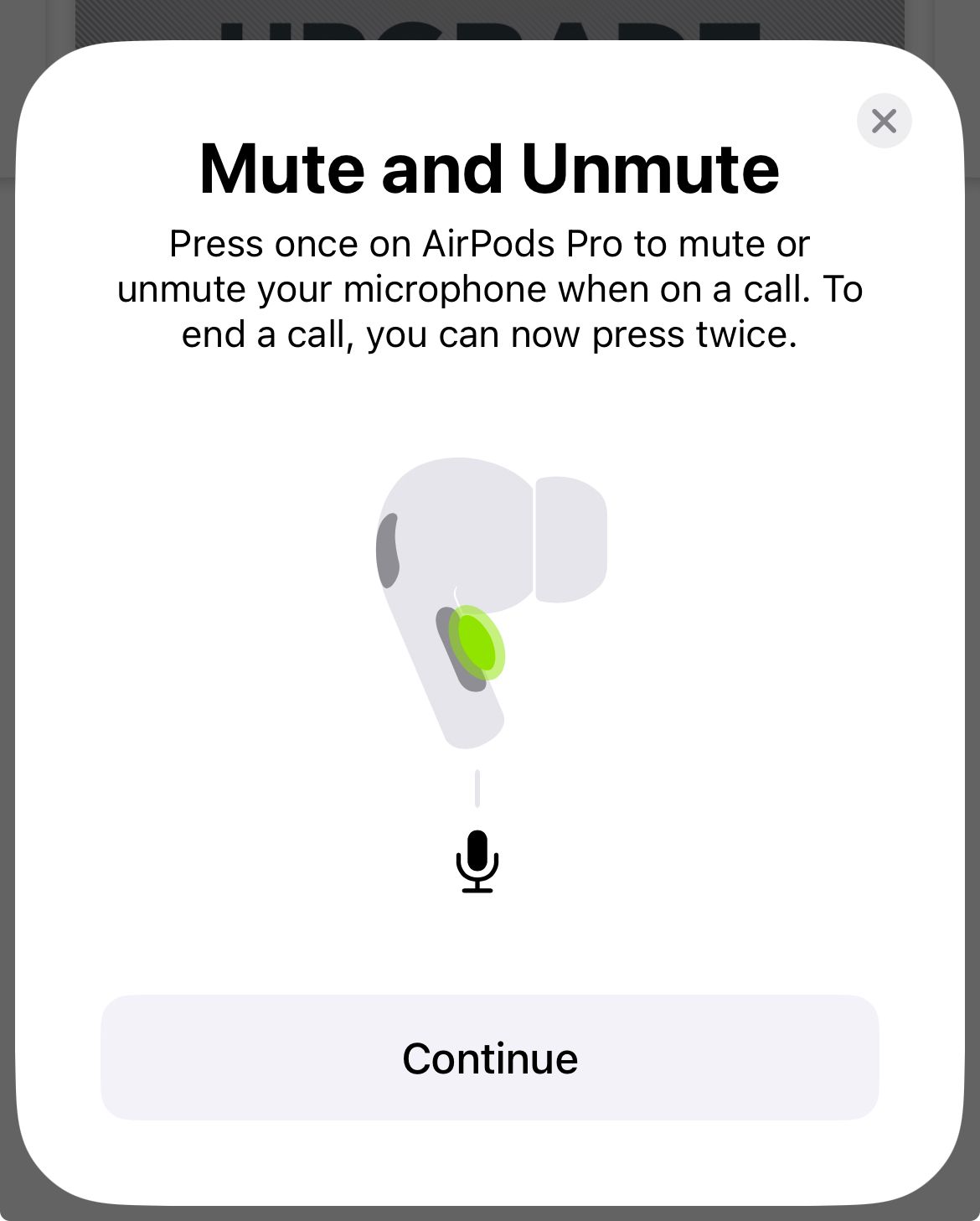

Addendum: AirPods Updates Are Better for Calls

This is a good change.

Start/End call used to be a single press, and anyone who's been on hold for 45 minutes and accidentally hung up will know this isn't ideal.

Changing the behaviour so a double press is now needed to hang up a call is a welcome change, and this dialog explains it clearly.

For a more detailed walkthrough of how to use Adaptive Audio, check out this guide from Michael Potuck at 9to5Mac.